You can have a fast server, a strong content delivery network (CDN) and careful optimization, but one problem still shows up again and again: the first visitors after a deploy, cache purge or server restart experience a slower website.

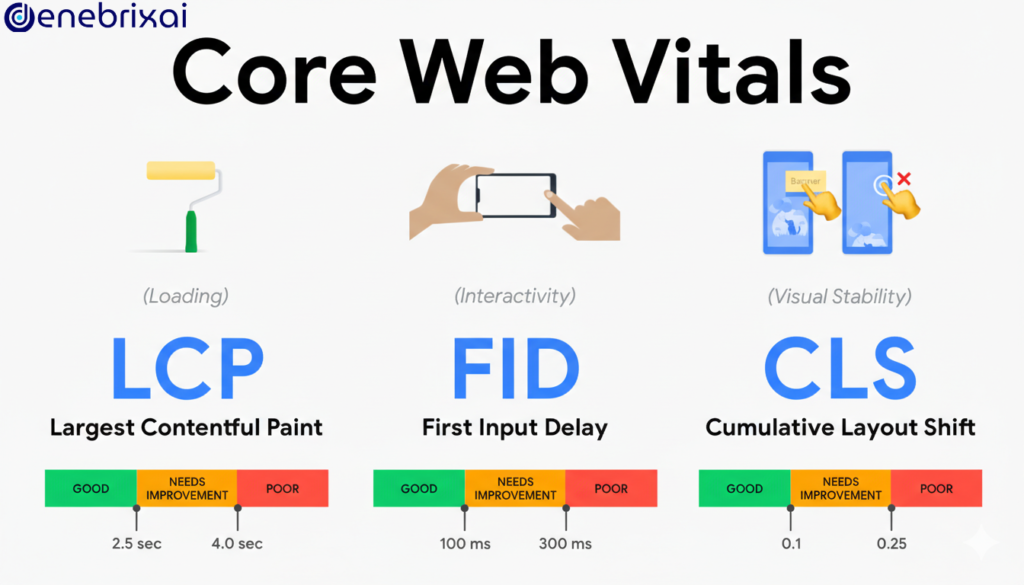

The reason is simple: your cache is empty, so every request has to hit the origin, run database queries and render everything from scratch. That slows Time to First Byte (TTFB) and Largest Contentful Paint (LCP), drags down your Core Web Vitals and, over time, can weaken your search engine optimization (SEO).

Warmup cache requests solve this by preloading key pages and APIs before real users arrive. An automated process visits the important routes so the cache is already warm when traffic starts.

This guide explains what warmup cache requests are, how they work, when to use them and how to include them in a modern performance and SEO strategy.

What Is a Warmup Cache Request?

A warmup cache request is a deliberate HTTP request sent by your own system rather than by a real user. It has one clear goal: fill your caches before anyone visits your site.

Instead of waiting for visitors to slowly populate the cache, you ask a deployment script, cron job or internal tool to hit important URLs:

- Right after a new deployment

- After a cache purge or invalidation

- After a server or container restart

- Before a planned traffic spike or campaign
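
Here is a minimal sketch of such a job in Python, using only the standard library. `BASE_URL`, `CRITICAL_PATHS`, the `cache-warmer/1.0` user agent and the 0.2-second pause are placeholders for illustration, not recommendations:

```python
import time
import urllib.error
import urllib.request

# Placeholder values: replace with your own site and route list.
BASE_URL = "https://www.example.com"
CRITICAL_PATHS = ["/", "/category/shoes", "/api/homepage"]


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Send one GET request and return its HTTP status (0 on a network error)."""
    req = urllib.request.Request(url, headers={"User-Agent": "cache-warmer/1.0"})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            resp.read()  # read the whole body so the transfer completes
            return resp.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return 0


def warm(base_url: str, paths: list[str], fetch=fetch_status, pause: float = 0.2) -> dict[str, int]:
    """Request each path once, in order, pausing between requests."""
    results = {}
    for path in paths:
        results[path] = fetch(base_url + path)
        time.sleep(pause)  # keep the load on the origin gentle
    return results
```

A deploy hook could call `warm(BASE_URL, CRITICAL_PATHS)` once the new release is live and log any status other than 200. The `fetch` parameter lets you swap in a different request function, for example one that sends extra headers.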

Those warmup requests move through your normal stack: CDN, reverse proxy, application server and database. Each response is stored in the relevant caches. When real users arrive, they see fast, cached content instead of slow cold responses.

Warmup cache requests are one way to implement cache warming. You can combine them with other patterns such as lazy loading, write-through caching, and prefetching.

Cold Cache, Warm Cache and Prefetching

To design a good cache warming strategy, it’s useful to distinguish a few related concepts first.

| Concept | What it means |
| --- | --- |
| Cold cache | Cache is empty or expired; all requests go to the origin |
| Warm cache | Popular data is already cached; responses are faster and more stable |
| Warmup cache request | Automated request that preloads data into cache before users arrive |
| Prefetching | Loading what the user is likely to need next based on navigation |
| Lazy warming | Filling cache only when there is a miss, then possibly loading related data |

Warmup cache requests focus on system readiness. You prepare the system so that the first user experiences the same speed as the hundredth.

Prefetching and lazy warming focus more on individual journeys. They react to or predict what a specific visitor will need next.

In practice, fast websites usually combine these ideas instead of relying on only one technique.

Why Warmup Cache Requests Matter for Performance and SEO

From a user’s point of view, there is no “it will be fast after a few visits”. People remember the experience they get the first time. Widely cited survey data suggests that around 47 percent of consumers expect a website to load in two seconds or less, and about 40 percent will leave if it takes longer than three seconds to load.

Warmup cache requests help performance and SEO in several ways:

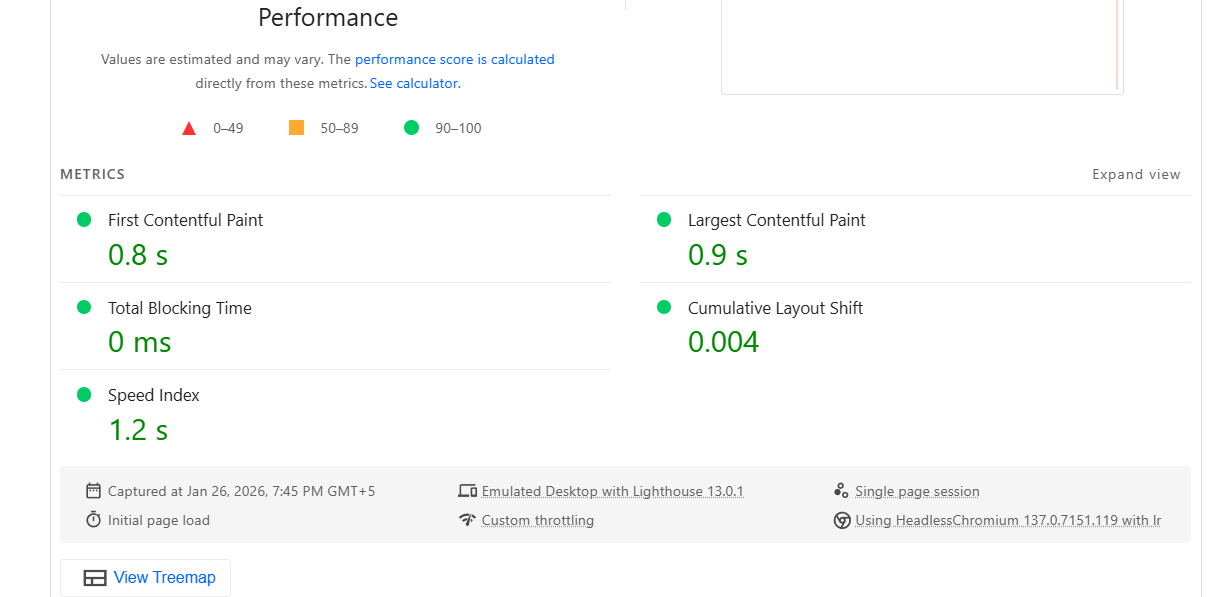

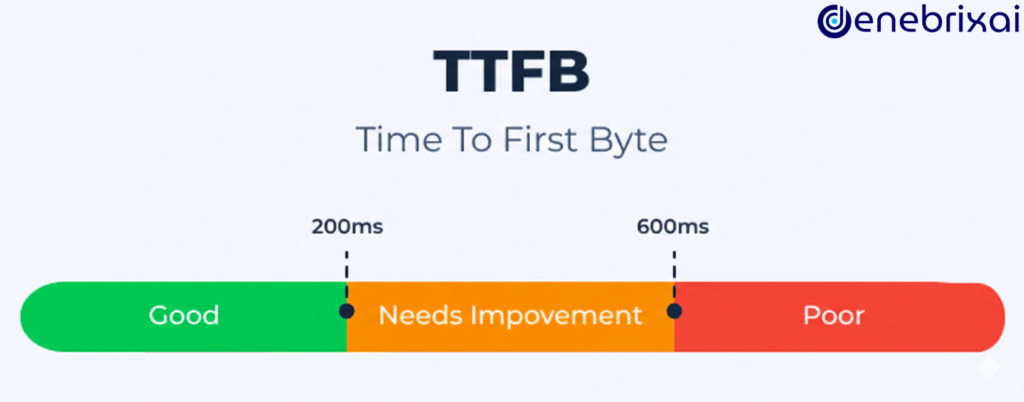

Better Time to First Byte (TTFB)

With a warm cache, your CDN or reverse proxy can respond in milliseconds, rather than waiting for the origin. This is especially helpful for users who are far from your primary server.

Improved Largest Contentful Paint (LCP)

Faster HTML and pre-generated image variants help the main content render quickly. A better LCP strengthens your Core Web Vitals and supports higher rankings.

More Stable Core Web Vitals

Without warmup, metrics may look good most of the day but drop right after deployments or incidents. Warmup helps keep these numbers consistent across the entire release cycle.

Higher Conversion and Lower Bounce Rate

Slow first loads damage trust, especially on e-commerce and lead generation pages. A warm cache removes that unlucky first visit effect. Studies show that e-commerce sites loading in about 1 second can convert several times better than those taking 4 seconds, which illustrates how costly slow pages can be.

Less Deployment Risk

You can deploy more often and with more confidence when you know key routes will be hot before you send real traffic to them.

Warmup cache requests do not fix heavy code, inefficient queries or huge images. They make sure the optimizations you already have in place are visible from the very first visitor.

How Warmup Cache Requests Move Through the Stack

Warmup cache requests need to follow the same path as real users. If they do not, you may warm the wrong layer or skip the cache entirely.

A simple flow looks like this:

Warmup Job Sends a Request

A script, deployment hook or internal service sends an HTTP GET to a URL, for example: /, /category/shoes or /api/homepage.

Request Hits the CDN

The CDN routes the request to the closest edge location. The edge node checks its local cache for a matching key. If it is a miss, it forwards the request to the origin.

Reverse Proxy and Application Handle the Request

Your load balancer or reverse proxy sends the request to an application server. The application runs code, calls the database and any other services, then builds a response.

Response Gets Caching Headers

The application sets headers such as Cache-Control, Surrogate-Control and ETag. Cache-Control and Surrogate-Control tell the CDN and other caches whether to store the response and for how long. ETag lets a cache revalidate a stale copy cheaply instead of downloading it again.
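
The following minimal WSGI application sketch (Python standard library only) makes the header roles concrete. The page body, the 60/600/3600-second lifetimes and the short hash-based ETag are illustrative assumptions. Which of these headers your CDN honors depends on the provider:

```python
import hashlib


def render_homepage() -> bytes:
    # Hypothetical renderer standing in for your real templates.
    return b"<html><body><h1>Home</h1></body></html>"


CACHE_HEADERS = [
    # Browsers may reuse the page for 60 s; shared caches such as a CDN for 600 s.
    ("Cache-Control", "public, max-age=60, s-maxage=600"),
    # Read only by CDNs that support Surrogate-Control; others ignore it.
    ("Surrogate-Control", "max-age=3600"),
]


def app(environ, start_response):
    """Minimal WSGI app that marks a public page as cacheable."""
    body = render_homepage()
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    if environ.get("HTTP_IF_NONE_MATCH") == etag:
        # The cache already holds this exact version: confirm it without a body.
        start_response("304 Not Modified", [("ETag", etag)] + CACHE_HEADERS)
        return [b""]
    start_response("200 OK", [
        ("Content-Type", "text/html; charset=utf-8"),
        ("Content-Length", str(len(body))),
        ("ETag", etag),
    ] + CACHE_HEADERS)
    return [body]
```

To inspect the headers locally with any HTTP client, you can serve the app with `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`.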

Caches Store the Result

The CDN edge stores the response. Reverse proxies, in-memory caches and database caches may also store partial responses or query results.

Later, when a real user requests the same URL with the same relevant headers, the CDN finds a cache hit. The request never reaches your origin, and both TTFB and LCP are much lower. This is why correct cache keys and headers are critical. If you warm one variant and most users hit a slightly different one, production still behaves as if the cache were cold.
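
A simplified model of a CDN cache key shows how a warmup request can miss the variant that real users hit. The key recipe below is an assumption: host, path, sorted query string without marketing parameters, plus `Accept-Encoding` and `Accept-Language`. Real CDNs build keys differently and often normalize headers first:

```python
from urllib.parse import parse_qsl, urlencode, urlsplit

# Assumed key recipe; check which headers and parameters your CDN really uses.
KEY_HEADERS = ("accept-encoding", "accept-language")
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def cache_key(url: str, headers: dict[str, str]) -> str:
    """Build a cache key from host, path, relevant query params and key headers."""
    parts = urlsplit(url)
    params = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in IGNORED_PARAMS)
    lowered = {k.lower(): v for k, v in headers.items()}
    header_part = "|".join(f"{h}={lowered.get(h, '')}" for h in KEY_HEADERS)
    return f"{parts.netloc}{parts.path}?{urlencode(params)}#{header_part}"


# A warmup request without Accept-Encoding lands on a different key than a browser.
warmup_key = cache_key("https://shop.example/category/shoes", {"Accept-Language": "en"})
browser_key = cache_key(
    "https://shop.example/category/shoes?utm_source=mail",
    {"Accept-Encoding": "gzip, br", "Accept-Language": "en"},
)
```

In this model, the marketing parameter does not matter, but the missing `Accept-Encoding` header does. Sending the same encoding header as the browser makes the warmup key match.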

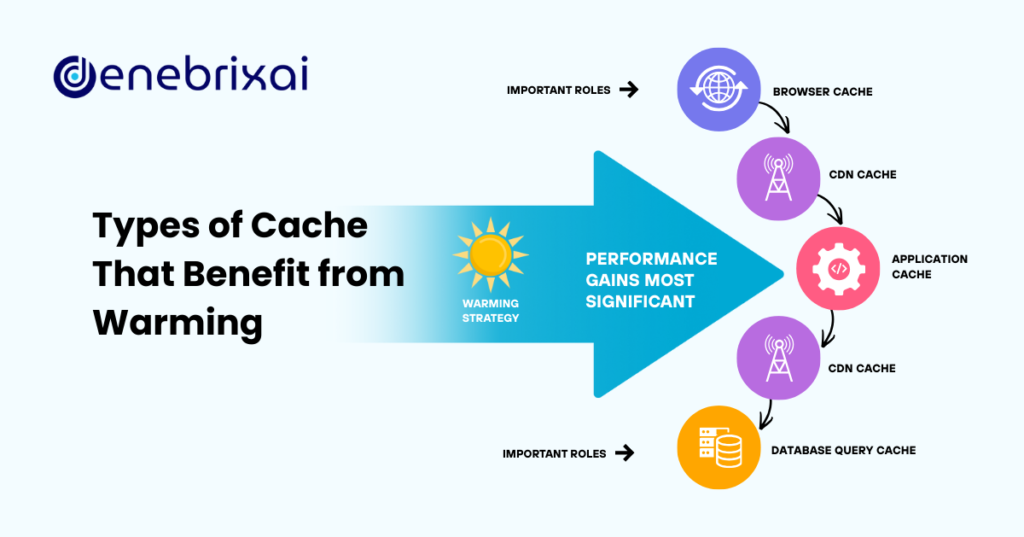

Which Caches and Content Should You Warm?

You do not need to warm every URL. Trying to warm the entire site usually wastes time and traffic.

Focus on the parts of your stack and content that give you the biggest performance and SEO benefit.

Key Cache Layers

- CDN cache for HTML pages, images and other static assets

- Reverse proxy or gateway cache for common API responses

- Application cache for rendered templates or fragments

- Database cache for heavy, repeated queries

High Value Content Types

Good starting points include:

- The homepage and main landing pages

- High-traffic category or listing pages

- Product and offer pages used in paid campaigns and email

- Login pages or dashboards that are expensive to render

- API endpoints used by your single-page application (SPA) or mobile app on initial load

Use your analytics and access logs to guide you. Export your top pages by traffic, revenue or leads, and group similar URLs where possible (for example, keep one representative of each key page type). Then build the warmup list from real data instead of guesses.
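
One way to turn access logs into a warmup list is sketched below. It assumes the common or combined log format. It counts successful GET requests per path (ignoring query strings) and keeps one representative per route type. The `GROUPS` patterns are hypothetical examples:

```python
import re
from collections import Counter

# Matches the request and status fields of a common/combined-format log line.
LOG_LINE = re.compile(r'"GET (?P<path>\S+) HTTP/[\d.]+" (?P<status>\d{3})')

# Hypothetical route groups; adapt the patterns to your own URL structure.
GROUPS = [
    ("product", re.compile(r"^/product/[^/]+$")),
    ("category", re.compile(r"^/category/[^/]+$")),
]


def top_paths(lines, limit: int = 50) -> list[str]:
    """Most requested paths with a 200 response, most popular first."""
    counts = Counter()
    for line in lines:
        m = LOG_LINE.search(line)
        if m and m.group("status") == "200":
            counts[m.group("path").split("?", 1)[0]] += 1
    return [path for path, _ in counts.most_common(limit)]


def one_per_group(paths: list[str], per_group: int = 1) -> list[str]:
    """Keep at most `per_group` paths of each route type; ungrouped paths pass through."""
    taken = Counter()
    picked = []
    for path in paths:  # already sorted by traffic, so the busiest one wins
        group = next((name for name, rx in GROUPS if rx.match(path)), None)
        if group is None:
            picked.append(path)
        elif taken[group] < per_group:
            taken[group] += 1
            picked.append(path)
    return picked
```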

For very dynamic content, keep warmup narrow and use shorter cache lifetimes. It is better to serve slightly slower, fresh data than a very fast but outdated view.

Designing a Warmup Cache Roadmap

You can add warmup cache requests gradually. There is no need to build a perfect system from the start.

Stage One: Simple Manual Warmup

In the first stage, create a short list of 10 to 50 critical URLs. After each deploy, run a small script or even use a browser to visit them. Then check your CDN or reverse proxy to confirm that those URLs now register as cache hits. This small step already improves worst-case performance after deployments.
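
The check for cache hits can be scripted. Header names are CDN-specific: `CF-Cache-Status` is Cloudflare's, several CDNs use `X-Cache`, and `X-Cache-Status` is a common name in self-configured reverse proxies. Treat the list below as an assumption to adapt, not a complete reference:

```python
# Common examples of cache-status headers; adjust for your CDN or proxy.
HIT_HEADERS = ("x-cache", "cf-cache-status", "x-cache-status")


def looks_like_cache_hit(headers: dict[str, str]) -> bool:
    """Heuristic: does this response appear to have been served from a cache?"""
    lowered = {k.lower(): v.lower() for k, v in headers.items()}
    for name in HIT_HEADERS:
        if "hit" in lowered.get(name, ""):
            return True
    # A positive Age header means a cache served a stored copy.
    try:
        return int(lowered.get("age", "0")) > 0
    except ValueError:
        return False
```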

Stage Two: Scripted Warmup in CI/CD

In the second stage, move warmup into your deployment pipeline. Use a script that reads a URL list from a file, database or analytics export, and add small delays or concurrency limits so you do not overload your origin. If you operate in several regions, you can also run separate warmup scripts for each region or environment. At this point, warmup becomes automatic and consistent for every release.
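
Here is a sketch of a pipeline-friendly warmer. It reads a plain-text URL list, using an assumed file format of one URL per line with `#` for comments. Concurrency is capped by the size of a thread pool. The `fetch` callable is a placeholder for your own request function that returns a status code:

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def parse_url_list(text: str) -> list[str]:
    """One URL per line; blank lines and lines starting with '#' are skipped."""
    urls = []
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#"):
            urls.append(line)
    return urls


def warm_concurrently(
    urls: list[str],
    fetch: Callable[[str], int],
    max_workers: int = 4,
    delay: float = 0.25,
) -> dict[str, int]:
    """Warm URLs with at most `max_workers` requests in flight at once."""

    def task(url: str) -> tuple[str, int]:
        status = fetch(url)
        time.sleep(delay)  # each worker pauses before picking up its next URL
        return url, status

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(task, urls))
```

In a pipeline step, you might read a hypothetical `warmup-urls.txt` file, pass its contents to `parse_url_list`, and fail the job if any returned status is not 200. For multiple regions, run the same step once per region.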

Stage Three: Data-Driven Warmup

In the third stage, generate warmup lists from analytics and logs on a regular schedule. Rank URLs by traffic, conversion rate or revenue impact, and warm only the top slice, for example the top 100 or top 500 URLs. You can then run warmup shortly before planned campaigns, send warmup traffic from different regions and measure how long warmed content stays hot at each edge location.
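
The sketch below ranks pages by a weighted score and keeps the top slice. The `PageStats` fields and `WEIGHTS` values are assumptions. The numbers would come from your analytics export, and the weights express how much you value a conversion or a unit of revenue relative to a visit:

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    visits: int
    conversions: int
    revenue: float


# Hypothetical weights; tune them to what matters for your business.
WEIGHTS = {"visits": 1.0, "conversions": 50.0, "revenue": 0.5}


def score(p: PageStats) -> float:
    """Combine traffic, conversions and revenue into one ranking number."""
    return (WEIGHTS["visits"] * p.visits
            + WEIGHTS["conversions"] * p.conversions
            + WEIGHTS["revenue"] * p.revenue)


def top_slice(pages: list[PageStats], n: int = 100) -> list[str]:
    """Paths of the n highest-scoring pages, best first."""
    return [p.path for p in sorted(pages, key=score, reverse=True)[:n]]
```

With these weights, a campaign page with fewer visits but strong sales can outrank the homepage, which is usually the point of data-driven warmup.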

Measuring Effectiveness and Tuning Your Strategy

Warmup cache requests are worth the effort only if they improve real outcomes. You should track at least these metrics.

Cache Hit Ratio

Measure how many requests are served from cache instead of the origin. Pay special attention to the first minutes after deployments and cache purges.
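
If your logs record whether each request was a cache hit, the ratio is a simple fraction. Many CDNs can export this in their logs, though field names vary. The `LogEntry` shape below is hypothetical. The optional window lets you compare the minutes right after a deploy with the rest of the day:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LogEntry:
    timestamp: float  # seconds since the epoch
    cache_hit: bool   # True if the CDN or proxy served the request from cache


def hit_ratio(entries: list[LogEntry], start: Optional[float] = None,
              end: Optional[float] = None) -> float:
    """Share of requests served from cache, optionally limited to [start, end)."""
    window = [
        e for e in entries
        if (start is None or e.timestamp >= start) and (end is None or e.timestamp < end)
    ]
    if not window:
        return 0.0
    return sum(e.cache_hit for e in window) / len(window)
```

For example, `hit_ratio(entries, deploy_time, deploy_time + 300)` gives the ratio for the first five minutes after a release.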

Time to First Byte (TTFB)

Compare TTFB for key URLs before and after warmup. Look closely at results right after a release, which is when cold starts usually appear.
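
Averages hide the slow first visits you are trying to remove, so it helps to compare percentiles. The minimal sketch below uses Python's `statistics` module. It assumes you already have TTFB samples in milliseconds for the same URLs before and after adding warmup, collected from synthetic tests or real user monitoring:

```python
import statistics


def summarize_ttfb(samples_ms: list[float]) -> dict[str, float]:
    """Median and 95th percentile of TTFB samples (needs at least 2 samples)."""
    # n=20 yields 19 cut points in 5 % steps; the last one is the 95th percentile.
    cut_points = statistics.quantiles(samples_ms, n=20, method="inclusive")
    return {"p50": statistics.median(samples_ms), "p95": cut_points[-1]}
```

The `inclusive` method treats the samples as the whole population, which keeps the 95th percentile within the observed range instead of extrapolating beyond the slowest sample.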

Largest Contentful Paint (LCP)

Use synthetic tests and real user monitoring to check whether LCP stays within your targets for first visits, not just for repeat visits.

Origin Load

Watch CPU usage, memory, database queries and outbound traffic on your origin during and after deployments. Effective warmup should reduce sharp spikes.

Error Rates

Confirm that warmed URLs return 200 OK and do not hide problems behind cached errors or misconfigured routes.

If you are not seeing improvement, adjust which URLs you warm, how often you run warmup jobs, the cache headers and time-to-live (TTL) values you use, and how many regions or edge locations you target.

Common Mistakes and How to Avoid Them

Warmup cache requests are simple to understand, but there are several common traps to avoid.

Overloading Your Own Origin

Sending thousands of warmup requests at once can resemble a self-inflicted denial-of-service attack. Always limit concurrency and spread requests over time.
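
Besides capping concurrency, you can cap the request rate. The token-bucket sketch below assumes a single warmup process. The rate and burst size are values you would choose based on what your origin handles comfortably. The injectable `clock` and `sleep` exist only to make the logic easy to test:

```python
import threading
import time


class TokenBucket:
    """Allow on average `rate` requests per second, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic, sleep=time.sleep):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so a small burst can go out at once
        self.clock = clock
        self.sleep = sleep
        self.last = clock()
        self._lock = threading.Lock()  # lets several worker threads share one bucket

    def acquire(self) -> None:
        """Block until a token is available, then consume it."""
        with self._lock:
            while True:
                now = self.clock()
                # Refill in proportion to elapsed time, never beyond capacity.
                self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
                self.last = now
                if self.tokens >= 1:
                    self.tokens -= 1
                    return
                self.sleep((1 - self.tokens) / self.rate)
```

For example, calling `bucket.acquire()` before each warmup request with `bucket = TokenBucket(rate=5, capacity=5)` keeps the warmer to roughly five requests per second after an initial burst of five.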

Warming the Wrong Variant

If cache keys include cookies, headers or query parameters, your warmup requests must mirror real user behavior. Otherwise, you warm data that almost nobody uses.
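
If your CDN keys on request headers, a warmer can send one request per realistic combination of those headers. The sketch below assumes `Accept-Encoding` and `Accept-Language` are part of the key. The values in `VARIANTS` are hypothetical and should mirror what your real visitors send most often:

```python
from itertools import product

# Hypothetical variant values: list only headers your CDN includes in its cache key,
# with the values real visitors send most often.
VARIANTS = {
    "Accept-Encoding": ["gzip, deflate, br", "gzip"],
    "Accept-Language": ["en-US", "de-DE"],
}


def request_variants(url: str, variants: dict[str, list[str]] = VARIANTS):
    """Yield one (url, headers) pair per combination of variant header values."""
    names = list(variants)
    for values in product(*(variants[name] for name in names)):
        yield url, dict(zip(names, values))
```

The number of combinations multiplies quickly, so keep each dimension short. Python's `http.client` sends `Accept-Encoding: identity` unless you set the header yourself. A naive Python warmer can therefore end up warming an uncompressed variant that browsers never request.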

Caching Personalized or Sensitive Data

Do not warm pages that contain user-specific data in a shared cache. Mark such responses with Cache-Control: private or no-store, and serve personalized content from separate routes or APIs.
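
A warmup script can double-check the responses it pulls into shared caches. The heuristic sketch below flags responses that carry `Cache-Control: private` or `no-store`, set a cookie, or use `Vary: *`. It is a safety net, not a substitute for correct headers on the origin:

```python
def safe_for_shared_cache(headers: dict[str, str]) -> bool:
    """Heuristic: False if a response looks personalized or explicitly uncacheable."""
    lowered = {k.lower(): v.lower() for k, v in headers.items()}
    directives = {
        d.strip().split("=", 1)[0] for d in lowered.get("cache-control", "").split(",")
    }
    if directives & {"private", "no-store"}:
        return False
    if "set-cookie" in lowered:
        return False  # a response that sets a cookie is usually user-specific
    if lowered.get("vary", "").strip() == "*":
        return False  # Vary: * means no stored copy can be reused safely
    return True
```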

Using Warmup as a Band-Aid

Warmup will not fix slow database queries, heavy scripts or uncompressed images. Use it to support good performance work, not to hide underlying problems.

Ignoring Cache Invalidation

If you warm content but never clear or refresh it correctly, users may see outdated pages for too long. Pair cache warming with a clear invalidation and refresh strategy.

Alternatives and Complementary Patterns

Warmup cache requests work best as part of a broader caching and performance plan. You can combine them with patterns like these.

Cache Aside (Lazy Loading)

The application checks the cache first. On a miss, it loads data from the database, returns the result and writes it into the cache for future requests.
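
Below is a minimal cache-aside sketch. A plain `dict` stands in for Redis or Memcached, and a hypothetical `FakeDatabase` counts queries. A warmup job can reuse the same read path: calling it for known hot keys fills the cache before users arrive.

```python
class FakeDatabase:
    """Hypothetical stand-in for a slow data store; counts how often it is queried."""

    def __init__(self, rows: dict):
        self.rows = rows
        self.queries = 0

    def load(self, key):
        self.queries += 1
        return self.rows[key]


def cache_aside_get(cache: dict, db: FakeDatabase, key):
    """Cache aside: the application checks the cache and fills it on a miss."""
    if key in cache:          # 1. try the cache first
        return cache[key]
    value = db.load(key)      # 2. on a miss, read from the database
    cache[key] = value        # 3. store the result for later requests
    return value


def warm_keys(cache: dict, db: FakeDatabase, hot_keys) -> None:
    """Warmup reuses the normal read path for keys you expect to be popular."""
    for key in hot_keys:
        cache_aside_get(cache, db, key)
```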

Write-Through Caching

When you write to the database, you also write the same data into cache. This keeps hot keys up to date without waiting for reads.
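
The write-through sketch below uses two dictionaries as stand-ins for the database and the cache. Every write updates both stores, so the next read is a cache hit without any warmup:

```python
class WriteThroughStore:
    """Writes go to the database and the cache together; reads prefer the cache."""

    def __init__(self):
        self.db = {}     # stand-in for the primary database
        self.cache = {}  # stand-in for Redis, Memcached or similar

    def write(self, key, value) -> None:
        self.db[key] = value     # persist first, so the cache never holds unsaved data
        self.cache[key] = value  # then refresh the cached copy

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        return self.db.get(key)
```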

Read-Through Caching

The application talks to the cache layer, and the cache system knows how to fetch fresh data from the origin if there is a miss, then store it.
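
Here is a read-through sketch. Unlike cache aside, the caller only talks to the cache object, which is configured with a loader function. The loader is a hypothetical stand-in for a database or origin call:

```python
from typing import Any, Callable, Hashable


class ReadThroughCache:
    """Callers only talk to the cache; the cache loads missing entries itself."""

    def __init__(self, loader: Callable[[Hashable], Any]):
        self._loader = loader  # e.g. a database query or origin fetch (hypothetical)
        self._data: dict = {}

    def get(self, key: Hashable) -> Any:
        if key not in self._data:
            self._data[key] = self._loader(key)  # miss: fetch, then store
        return self._data[key]
```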

Snapshot and Restore

Some cache technologies can persist cached data to disk and reload it on restart. This reduces cold start windows after failures or restarts.
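
Redis, for example, can persist its data with RDB snapshots or an append-only file. For a small in-process cache, the same idea can be sketched with JSON, assuming string keys and JSON-serializable values:

```python
import json
from pathlib import Path


def save_snapshot(cache: dict, path: Path) -> None:
    """Write the cache to a temporary file, then rename it into place."""
    tmp = path.with_name(path.name + ".tmp")
    tmp.write_text(json.dumps(cache), encoding="utf-8")
    tmp.replace(path)  # atomic on POSIX when both paths are on the same filesystem


def load_snapshot(path: Path) -> dict:
    """Return the saved cache, or an empty (cold) cache if no snapshot exists."""
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))
```

Writing to a temporary file first means a crash during the save leaves the previous snapshot intact rather than a half-written file.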

Rolling Deployments

Instead of restarting your entire fleet at once, you roll out changes gradually. Some nodes stay warm and keep serving traffic while new nodes warm up in the background.

Serverless-Specific Techniques

For serverless platforms, you can pair cache warmup with provisioned concurrency, scheduled triggers and smarter routing to reduce cold start latency for key functions.

You do not need to use every pattern. Choose what fits your stack and business, then combine those patterns with targeted warmup cache requests on your most important routes.

Quick Checklist and Next Steps

If you want a straightforward way to start, use this checklist.

- List your top landing pages, product pages and key APIs.

- Create a warmup script that hits those URLs after each deployment.

- Add and tune cache headers and time-to-live (TTL) values on those responses.

- Add rate limiting or delays inside the script to protect your origin.

- Measure cache hit ratio, TTFB and LCP before and after warmup.

- Refine the URL list, timing and regions based on the results you see.

Warmup cache requests are not the most glamorous optimization, but they solve a very real problem: the first visitors after a change should not suffer a slow site. Combined with strong caching rules, solid Core Web Vitals work and ongoing SEO, they help your website feel reliably fast from the very first load.

FAQs

Do small websites really need warmup cache requests?

Not always. If your traffic is low and your pages are simple, regular visits might be enough to warm the cache naturally. Warmup cache requests become more useful as your traffic, page complexity and deployment frequency grow.

How many URLs should I put in my warmup list?

Start small, for example 20 to 100 URLs. Focus on the homepage, key category pages, top product or offer pages and the APIs used on initial load. Expand only if you see clear benefits.

How often should I run warmup jobs?

The most important times are after deployments, cache purges and before planned campaigns. Running warmup continuously is rarely needed. Align warmup with real changes and expected spikes.

Will warmup cache requests affect my analytics?

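Yes, they can. Warmup traffic looks like real traffic unless you filter it out. Mark it with a special user-agent string, header, IP range or query parameter, and exclude that traffic in your analytics configuration. If you use a query parameter, make sure your CDN leaves it out of the cache key. Otherwise, you warm a variant that real visitors never request.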
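
The sketch below marks and filters warmup traffic in server-side logs. The user agent, the `X-Cache-Warmup` header and the record format are all hypothetical. A script-based warmer does not run JavaScript, so JavaScript analytics tags typically do not fire for it. The filtering matters most for log-based reports and server-side analytics:

```python
# Hypothetical marker values: choose your own and keep them stable.
WARMUP_USER_AGENT = "example-cache-warmer/1.0"
WARMUP_HEADER = "X-Cache-Warmup"


def warmup_headers() -> dict[str, str]:
    """Headers the warmup script adds to every request it sends."""
    return {"User-Agent": WARMUP_USER_AGENT, WARMUP_HEADER: "1"}


def is_warmup(record: dict) -> bool:
    """True if a logged request carries the warmup user agent or header."""
    if WARMUP_USER_AGENT in record.get("user_agent", ""):
        return True
    headers = {k.lower(): v for k, v in record.get("headers", {}).items()}
    return headers.get(WARMUP_HEADER.lower()) == "1"


def human_traffic(records: list[dict]) -> list[dict]:
    """Drop warmup requests before computing analytics."""
    return [r for r in records if not is_warmup(r)]
```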

Is it safe to warm pages with dynamic or personalized content?

Shared caches should not store user-specific information. Warm the public, non-personalized versions of pages and keep private data behind APIs or routes that are not cached globally.

Can warmup cache requests help reduce server costs?

They can. A higher cache hit ratio means fewer expensive calls to your origin and database during busy periods. Over time, that can reduce the need for extra capacity and make scaling easier to plan.